Note: All stats and figures below are true only at the time of writing, and will certainly change over time.

Running a massively popular website and asset resource while being funded primarily by donations has always been a core challenge of Poly Haven.

It’s easy to throw a bunch of files on Amazon S3 and call it a day, but you’re gonna rack up a bunch of bills that you simply cannot afford to pay.

Serving 80TB of bandwidth from S3 is estimated to cost around $4,000 per month alone, which is coincidentally about the same amount that is being donated to us on Patreon currently.

This obviously leaves zero budget for actually creating assets, never mind all our other costs.

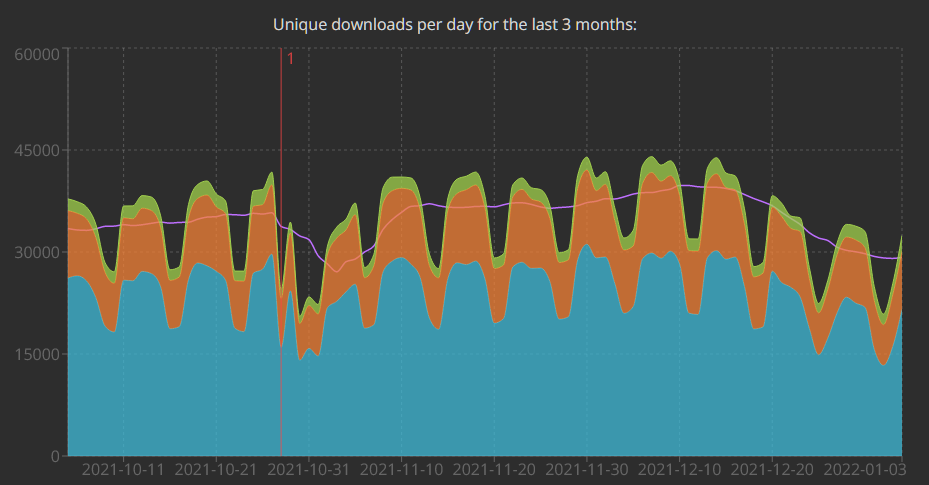

So how the heck do we manage to run a website with 5 million pageviews and 80 terabytes of traffic every month for under $400?

Cloudflare All the Way

This web giant is probably the reason we can do what we do so easily and so affordably. Sure, we could live without them, but it’d be a lot more work.

Cloudflare, for us, is primarily a massive caching layer.

What does that mean? When someone in London downloads a file for example, the file is fetched from our origin server (more on that later) but routed through one of Cloudflare’s nearby edge nodes.

Then, the next time someone in London downloads the same file, Cloudflare has cached it and serves it to them directly, without needing to bother our origin server.

We can define how long a particular file or URL should be cached for using a set of rules, so that rarely changing content (like asset files) can stay cached as long as possible, while content that changes every day (e.g. our list of assets) is updated more often.
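To make the idea concrete, here is a minimal sketch of an edge node that caches responses according to per-URL rules. It is an illustration only, not how Cloudflare works internally: the URL patterns, lifetimes, and the `EdgeNode` class are all hypothetical.

```python
from fnmatch import fnmatch

# Hypothetical rules: (URL pattern, seconds to keep the response cached).
# These patterns and lifetimes are illustrative, not Poly Haven's real config.
CACHE_RULES = [
    ("/files/*", 30 * 24 * 3600),  # asset files rarely change: keep for a month
    ("/assets", 24 * 3600),        # the asset list changes daily
]
DEFAULT_TTL = 0  # anything unmatched is never cached


def ttl_for(path: str) -> int:
    """Return the cache lifetime of the first rule that matches the path."""
    for pattern, ttl in CACHE_RULES:
        if fnmatch(path, pattern):
            return ttl
    return DEFAULT_TTL


class EdgeNode:
    """One edge location that caches responses fetched from the origin."""

    def __init__(self, fetch_from_origin):
        self.fetch_from_origin = fetch_from_origin
        self.store = {}  # path -> (expiry time, body)

    def get(self, path: str, now: float) -> tuple[str, str]:
        cached = self.store.get(path)
        if cached and cached[0] > now:
            return "HIT", cached[1]
        body = self.fetch_from_origin(path)
        ttl = ttl_for(path)
        if ttl > 0:
            self.store[path] = (now + ttl, body)
        return "MISS", body


origin_requests = []


def origin(path: str) -> str:
    origin_requests.append(path)
    return f"contents of {path}"


london = EdgeNode(origin)
first = london.get("/files/sky.hdr", now=0)
second = london.get("/files/sky.hdr", now=60)
print(first[0], second[0], len(origin_requests))  # MISS HIT 1
```

The second request never reaches the origin, which is the whole point of the caching layer.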

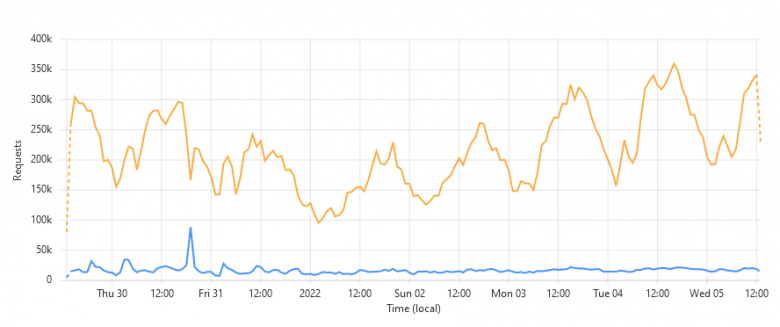

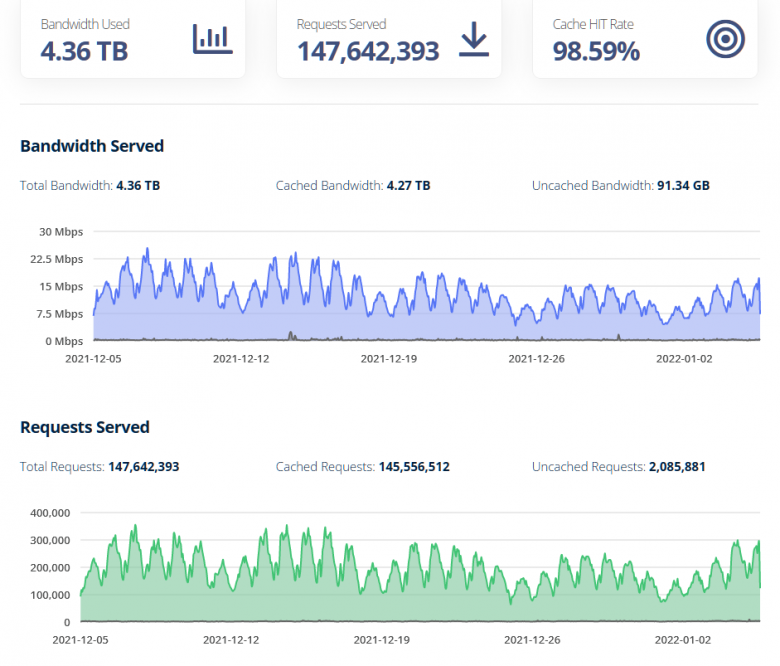

For asset downloads, these cached downloads account for about 85% of all traffic.

This doesn’t only apply to file downloads, however; it’s used for everything else on the website too.

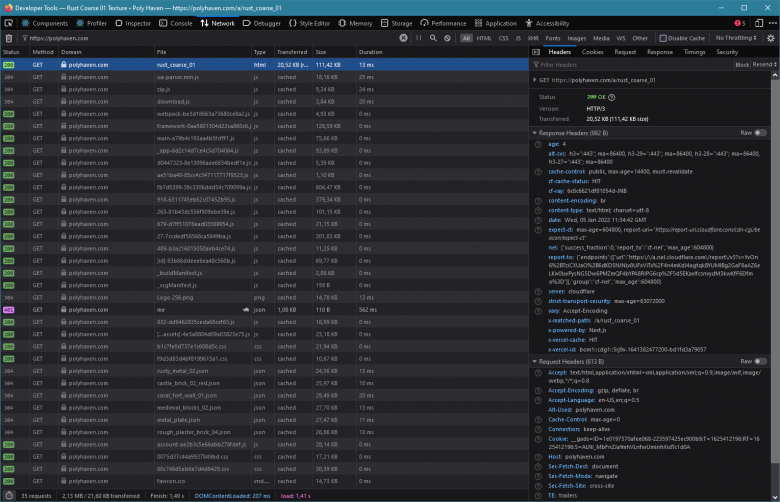

Every time you load a page, your browser sends a few dozen requests (think stylesheets, JavaScript, JSON data…), most of which are for static content.

Because of Argo (which I’ll explain below), Cloudflare can cache this kind of traffic even better than the huge asset files, resulting in a cache ratio of about 93%.

All this means that our origin server(s) have a lot less work to do, which means they’re theoretically more affordable.

And what does this amazing caching layer cost us?

$40 per month.

Cloudflare’s Pro plan costs $20 per month per domain, and we have two domains: polyhaven.com for the main website, and polyhaven.org for the asset downloads.

Backblaze Bandwidth Alliance

So after Cloudflare, we only have to handle about 15% of our 80TB of monthly traffic. That’s still not nothing though, and it would cost us about $650 using Amazon S3.

S3 is not the only player in the game though; there are other, more budget-friendly cloud providers.

The one we use is Backblaze, another internet giant that’s better known for its consumer backup service.

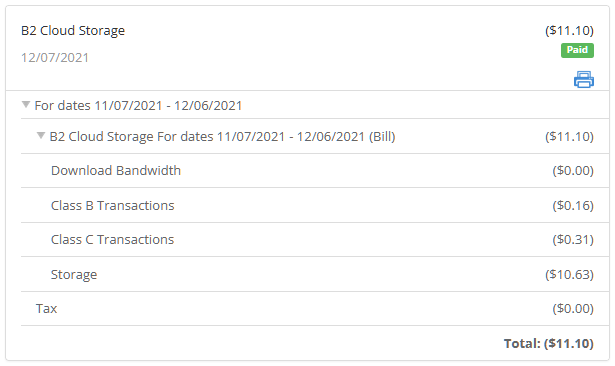

On its own, B2 (Backblaze’s S3-compatible storage service) would cost us about $130 per month for that last 15% that Cloudflare doesn’t handle.

But we can get around that thanks to a partnership between Backblaze and Cloudflare called the Bandwidth Alliance.

As long as we use both services together, and pay for the $20 Cloudflare subscription, we don’t get charged for download traffic at all.

All we pay for is storage, some uploads and API requests, which come to around $11 per month.
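The figures in this section are easier to compare side by side. The short sketch below only restates the monthly estimates quoted above; nothing in it comes from a provider's price list, and the option labels are my own.

```python
# All figures are the monthly estimates quoted in the text above.
TOTAL_TRAFFIC_TB = 80
CLOUDFLARE_HIT_RATIO = 0.85  # share of download traffic served from cache

# Traffic that still has to be served by the origin storage provider.
origin_tb = TOTAL_TRAFFIC_TB * (1 - CLOUDFLARE_HIT_RATIO)

# Estimated monthly bill for the storage provider under each setup (USD).
options = {
    "S3, no caching layer": 4000,
    "S3 behind Cloudflare": 650,
    "B2 behind Cloudflare, paying egress": 130,
    "B2 with the Bandwidth Alliance": 11,
}

print(f"Traffic reaching the origin: {origin_tb:.0f} TB")
for name, usd in options.items():
    print(f"{name:<38} ${usd:>5}")
```

Note that the last three rows exclude the Cloudflare subscription itself, which is covered in the previous section.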

Web “Server”

So that covers all of our costs for hosting the asset files themselves so far, but we still need a website to show them on.

polyhaven.com is built with Next.js, a JavaScript framework created by Vercel.

While you can absolutely run a Next.js application on your own servers or half a dozen other cloud providers, Vercel offers a fairly straightforward and affordable service to deploy your web application with them directly.

This saves us the hassle of managing our own server, while having the added benefit of being a serverless environment which improves performance for users all around the world.

Their base fee is $20 per month, with additional costs based on usage.

Since we use Cloudflare in front of Vercel and are super careful about what can be cached and what can’t (e.g. anything requiring user authentication), we generally don’t go over the included usage limits unless something goes terribly wrong.

The Database

An important part of any content website for sure – this is where we store all the information about every asset, lists of every file for each of them, download logs, etc.

Back when we still ran hdrihaven.com and the other sites separately, all on their own manually managed LAMP stacks, the databases were the first to start suffering from performance issues.

To avoid this problem in the future, I decided to splurge a bit and go for a cloud solution where I wouldn’t have to worry about reliability, performance, scaling or integrity ever again: Google Firestore.

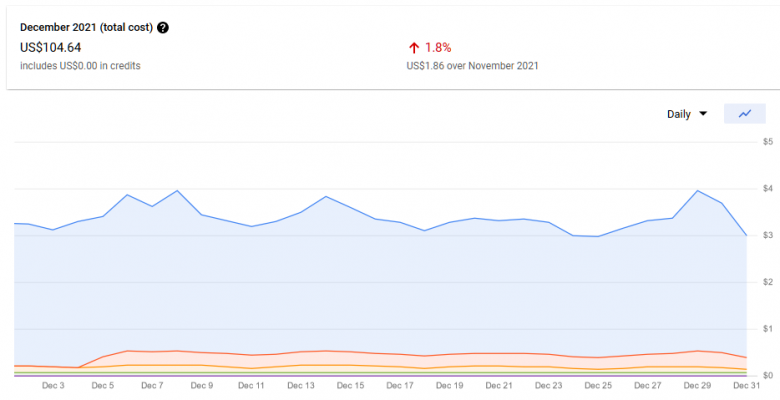

This is certainly not the cheapest option: at around $100 per month it accounts for more than a quarter of our monthly web budget, but I still think it’s the right way to go.

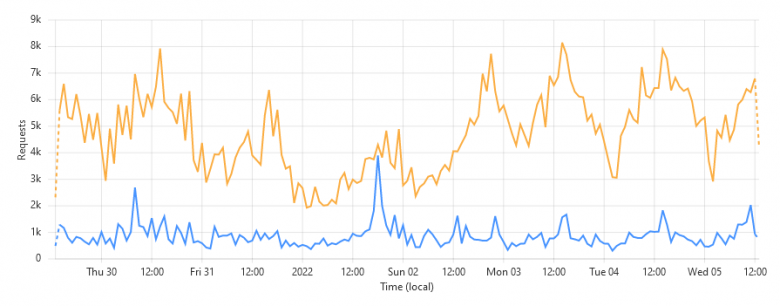

With Firestore, you pay per usage beyond the free tier. For us, this is primarily database reads (the blue line above).

Every time a bit of data is fetched from the database, we pay a tiny amount. Per read it’s practically insignificant, at $0.0000006. But multiply this by the number of document reads you have to do per page view (e.g. on our library page, that’s one read per asset, 866 currently), multiplied by the number of page views (~5M per month) and it can get very expensive very quickly.
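As a rough worked example of that multiplication, the sketch below uses the three numbers quoted above. It assumes the worst case where every page view is a library page and nothing is cached, so it is an upper bound rather than a real bill. The `cached_fraction` parameter is my own addition for illustration.

```python
PRICE_PER_READ_USD = 0.0000006   # per-read price quoted above
READS_PER_LIBRARY_VIEW = 866     # one read per asset on the library page
PAGE_VIEWS_PER_MONTH = 5_000_000


def monthly_read_cost(views, reads_per_view, cached_fraction=0.0):
    """Cost of database reads when only uncached views hit the database."""
    billable_views = views * (1 - cached_fraction)
    return billable_views * reads_per_view * PRICE_PER_READ_USD


uncached = monthly_read_cost(PAGE_VIEWS_PER_MONTH, READS_PER_LIBRARY_VIEW)
print(f"${uncached:,.0f} per month")  # $2,598 per month
```

Passing a `cached_fraction` shows how quickly caching shrinks that number, since only cache misses ever reach the database.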

To avoid database reads as much as possible, we cache as much as possible. Our data doesn’t change that often, or when it does (e.g. download counts) it’s not very important to show users the latest information anyway. Cloudflare all the way 🙂

Our API

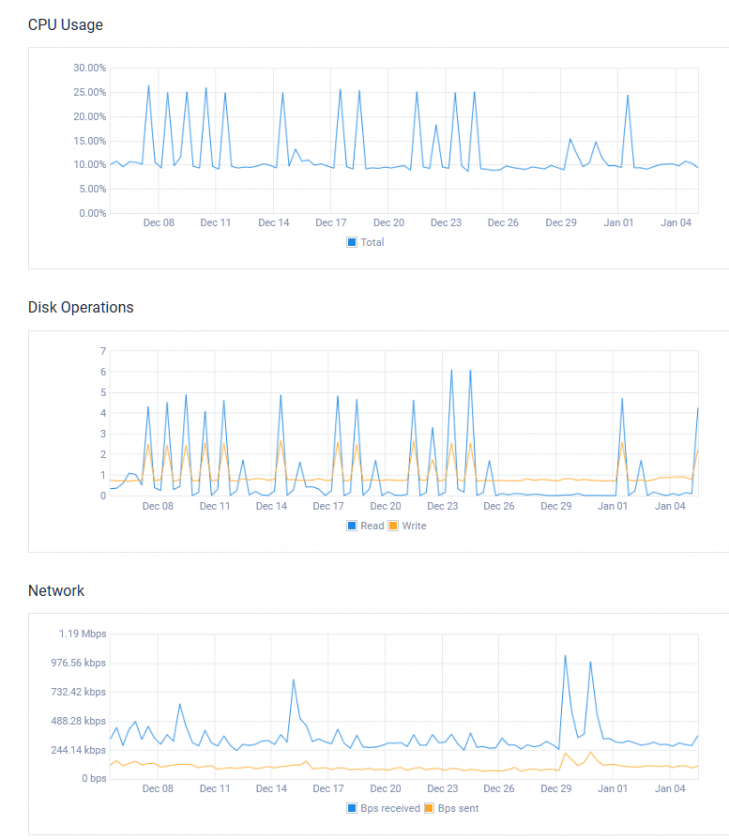

To give us the most control over caching database reads, and also to avoid racking up bills in Vercel, we have a separate $5 server (yes, seriously) on Vultr that runs our API.

The purpose of this API is to connect our front-end website (on Vercel) to our database. That’s all it does.

And because we cache everything so heavily (in order to reduce database costs), the API server can be extremely basic.

It’ll be a long time before this server becomes inadequate for our needs, and before that happens it’ll be trivial to migrate the server snapshot to a beefier server or, more likely, a load-balanced network of servers.

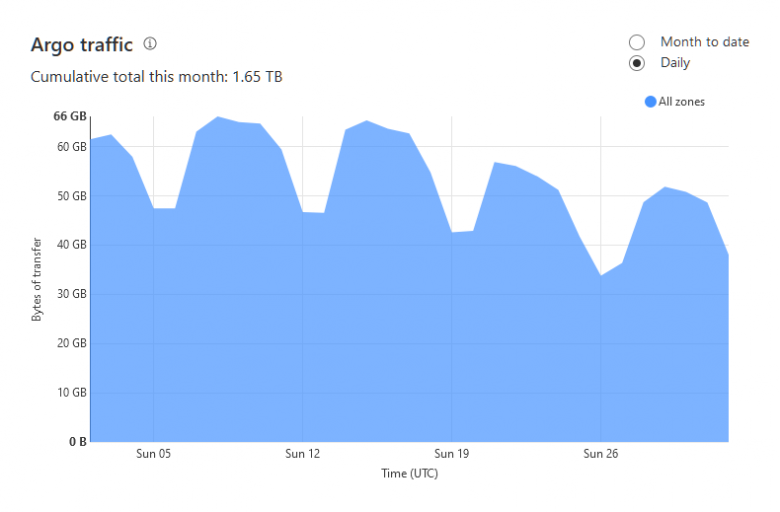

Argo

One of the main reasons our database and API costs are so low (relative to what they could be without Cloudflare) is the extremely high cache ratio of 93%.

It’s a direct effect – the more we can get Cloudflare to cache, the less often our API has to fetch things from the database itself, the lower our usage costs.

Argo is an optional extra service from Cloudflare that does two things:

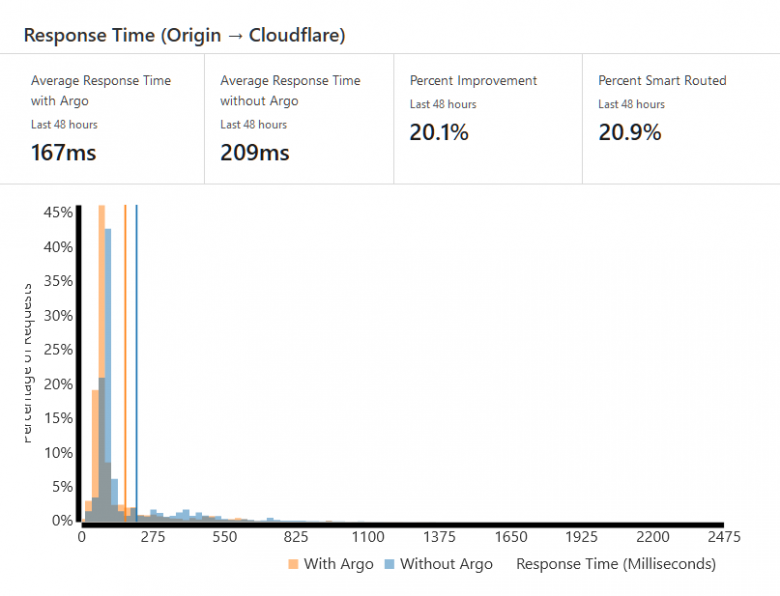

- Optimizes network routes to improve latency (this helps our site feel faster to users).

- Adds an additional layer to the cache, so all global traffic goes through only a handful of their biggest data centers before splitting up to their hundreds of edge nodes.

This second point is what we care about.

Using our example from earlier: With Argo, if someone in London first downloads a file, Cloudflare will now cache that file not just for London, but for the whole of Western Europe.
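Here is a minimal sketch of that extra layer, assuming a simple model where each cache asks its parent on a miss. The class names and the Paris node are hypothetical, and real tiered caching involves expiry and many more nodes.

```python
class Origin:
    """The origin server; counts how many requests actually reach it."""

    def __init__(self):
        self.requests = 0

    def get(self, path):
        self.requests += 1
        return f"contents of {path}"


class Cache:
    """A cache that asks its parent (another cache or the origin) on a miss."""

    def __init__(self, name, parent):
        self.name = name
        self.parent = parent
        self.store = {}

    def get(self, path):
        if path not in self.store:
            self.store[path] = self.parent.get(path)
        return self.store[path]


def origin_requests(tiered: bool) -> int:
    """Request the same file from two edge nodes and count origin fetches."""
    origin = Origin()
    # With tiering, both edge nodes share one regional cache as their parent.
    upstream = Cache("western-europe", origin) if tiered else origin
    london = Cache("london", upstream)
    paris = Cache("paris", upstream)
    london.get("/files/sky.hdr")
    paris.get("/files/sky.hdr")
    return origin.requests


print(origin_requests(tiered=False))  # 2
print(origin_requests(tiered=True))   # 1
```

Without the shared tier, every edge node has to fetch the file from the origin once. With it, only the first request in the region does.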

This results in a much higher cache ratio (going from ~75% to 93% with our configuration), but comes at a cost: You now pay per GB of traffic going through the Argo network.

This is why we have separate .com and .org domains – we have Argo enabled on the .com domain which runs the website with relatively low bandwidth, and then we leave Argo disabled on the .org domain which serves the 80TB of download traffic.

Argo costs us about $160 per month currently, by far the biggest single expense. But remember it saves us a huge chunk of database usage fees, by my back-of-the-napkin math around $250.

Plus we get the benefit of improved latency, less load on our API server, and less Vercel usage.
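The back-of-the-napkin math can be reproduced roughly as follows. This sketch assumes database cost scales linearly with the share of requests that miss the cache and ignores the Firestore free tier, so treat the result as an estimate only.

```python
DATABASE_COST_WITH_ARGO = 100  # USD per month, at a 93% cache ratio
RATIO_WITH_ARGO = 0.93
RATIO_WITHOUT_ARGO = 0.75
ARGO_COST = 160                # USD per month

# Only cache misses reach the API and database.
miss_with = 1 - RATIO_WITH_ARGO
miss_without = 1 - RATIO_WITHOUT_ARGO

# Assumption: database cost is proportional to the miss rate.
database_cost_without_argo = DATABASE_COST_WITH_ARGO * miss_without / miss_with
savings = database_cost_without_argo - DATABASE_COST_WITH_ARGO

print(f"Database cost without Argo: ${database_cost_without_argo:.0f}")  # $357
print(f"Database fees saved by Argo: ${savings:.0f}")                    # $257
print(f"Net saving after paying for Argo: ${savings - ARGO_COST:.0f}")  # $97
```

Under that assumption, Argo more than pays for itself on database fees alone.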

Image Hosting

Finally, the last piece of the puzzle is mostly separate from everything else.

All of our images shown on the website (thumbnails, renders, previews, etc.) are stored on Bunny.net – another budget CDN.

Bunny.net doesn’t just store our images though, they also have an optimization service which allows us to dynamically resize and compress images for the website. This greatly improves both user experience (faster page loads) and our own workflow (we only need to upload a single PNG and it’s available in any size and format).

This costs us about $27 per month, depending on traffic.

Total

- DNS, caching & egress: Cloudflare (2 domains) – $40

- Caching: Cloudflare (Argo) – $160

- Asset storage: Backblaze B2 – $11

- Web hosting: Vercel – $20

- Database: Firestore – $100

- API: Vultr – $5

- Image hosting & optimization: Bunny.net – $27

- Domains: Cloudflare – $4

- Email fees: MXroute – $3

That brings the total to about $370.
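For anyone who wants to check the sum or see where the money goes, the list above can be tallied in a few lines. The dictionary keys are shortened labels of my own; the amounts are the ones listed.

```python
# Monthly costs in USD, copied from the list above.
monthly_costs_usd = {
    "Cloudflare (2 domains)": 40,
    "Cloudflare (Argo)": 160,
    "Backblaze B2": 11,
    "Vercel": 20,
    "Firestore": 100,
    "Vultr": 5,
    "Bunny.net": 27,
    "Domains": 4,
    "MXroute": 3,
}

total = sum(monthly_costs_usd.values())
print(f"Total: ${total}")  # Total: $370

# Largest expenses first, with each one's share of the total.
for name, usd in sorted(monthly_costs_usd.items(), key=lambda item: -item[1]):
    print(f"{name:<24} ${usd:>3}  {usd / total:.0%}")
```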

A lot of these costs are based on our usage of these services, which is directly affected by traffic on the website.

These costs will likely grow as we grow, which is good. If you’re reading this in the distant future, just take a look at our public finance reports to see how things have changed 🙂

Oh and about those finance reports, the “Web Hosting” category there is a bit higher than $370 as it includes some other servers and services we use to assist in managing our team and uploading assets – those aren’t strictly related to running the polyhaven.com site itself, but rather Poly Haven the company.

Future Thoughts and Contingencies

Our infrastructure is intentionally quite modular, so that we don’t rely on any service too heavily. Even Cloudflare could be replaced if we had to.

Our API server is currently our weakest single point of failure – I don’t have any experience running a public API. We want to allow other people to depend on the API to integrate our assets with their software, so this area needs some research and improvement.

Google Firestore is nice and convenient, but it is quite expensive. We could investigate some other managed database options in future.

One of our biggest cost savings is with the Cloudflare + Backblaze Bandwidth Alliance. The plan, should this cease to exist at some point in the future, would be to set up our own network of load-balanced dedicated servers to handle the traffic. This sounds scary, but we actually did this in the past and it worked quite well. It’s a pain to manage for sure, but potentially just as cheap.

Hopefully this monster of a blog post answers some of your questions and helps you figure out the architecture you need for your own project, or was at least interesting to read 🙂

Would love to know more about how you run your website on Vercel

Do you cache content in CF with Vercel as the source of truth?

Do you ever need to expire a CF cache when the content updates in Vercel?

Or is it only ever long running content?

I know that Vercel has its own caching strategy, and it’s possible to expire cached content there, but it probably would become expensive for a large site?

Good question 🙂

Vercel doesn’t really want you to stick CF in front of them, since it adds another hop and theoretically some latency, but that’s only true for the CF-uncached (7-15%) requests. The other 85-93% of requests see *significantly* better performance if they don’t have to bother Vercel (and save you usage fees).

The catch is managing the caching layer. Every time you push to your repo and Vercel automatically deploys it, you need to flush your entire cache. If you don’t, users will experience a lot of visual glitches on the site, since some pages are looking for scripts that no longer exist.

My workflow is this:

1. Push (triggering a build on Vercel)

2. While it’s building, set your CF pagerules to bypass the cache

3. After Vercel has deployed the changes, purge the CF cache completely.

4. Wait 30s and set your pagerule back to “Cache Everything”.
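The Cloudflare side of these steps could be scripted along the following lines. This is a sketch, not what Poly Haven runs (the steps are done by hand): it assumes the cache is controlled by a single page rule, and it uses the v4 API's page rule and `purge_cache` endpoints as I understand them, so check the request shapes against Cloudflare's current documentation. The zone ID, rule ID and token are placeholders you would supply.

```python
import json
import time
import urllib.request

API = "https://api.cloudflare.com/client/v4"


def cache_level_request(zone_id, rule_id, level):
    """Request that switches a page rule's cache level (e.g. 'bypass')."""
    return {
        "method": "PATCH",
        "url": f"{API}/zones/{zone_id}/pagerules/{rule_id}",
        "body": {"actions": [{"id": "cache_level", "value": level}]},
    }


def purge_everything_request(zone_id):
    """Request that purges the entire cache for the zone."""
    return {
        "method": "POST",
        "url": f"{API}/zones/{zone_id}/purge_cache",
        "body": {"purge_everything": True},
    }


def send(request, token):
    """Send one request with a bearer token and return the decoded reply."""
    req = urllib.request.Request(
        request["url"],
        data=json.dumps(request["body"]).encode(),
        method=request["method"],
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(req) as response:
        return json.load(response)


def before_deploy(zone_id, rule_id, token, send=send):
    """Step 2: bypass the cache while Vercel is building."""
    send(cache_level_request(zone_id, rule_id, "bypass"), token)


def after_deploy(zone_id, rule_id, token, send=send, sleep=time.sleep):
    """Steps 3 and 4: purge, wait 30s, then cache everything again."""
    send(purge_everything_request(zone_id), token)
    sleep(30)
    send(cache_level_request(zone_id, rule_id, "cache_everything"), token)
```

You would call `before_deploy` right after pushing and `after_deploy` once Vercel reports the deployment as finished. The `send` and `sleep` parameters exist only so the sequence can be dry-run without touching the network.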

I don’t ever touch the cache controls in Vercel; I didn’t know there were any 🙂

Hey Greg,

Why are steps 2-4 necessary? Wouldn’t it be enough to just wipe the cache after the new version is deployed on Vercel?

It’s complicated, and I don’t fully understand it either, but no. I tried this first of course, and it helps a bit, but you’ll still see a metric ton of errors and reports from users.

Nice post Greg!

We too have tried using CF in front of Vercel but they really don’t play well.

Interesting to see your approach by bypassing, clearing, then re-enabling the cache. I wonder, could you automate this by:

1. Creating a Vercel integration that sends webhook requests for each deployment phase (created/complete)

2. Creating CF workers (for each deployment life cycle phase) as the destination of the webhook POST requests

3. Using CF’s API to bypass, purge and re-enable programmatically

Extra: You could chuck in some sort of notification request to something like Slack on completion

Anyway, food for thought

Thanks for the detailed post!

Have you considered Cloudflare Pages instead of Vercel?

I know of it but didn’t look too deeply into it no. Any idea if or how it could be a better option?

Edit: important Next.js features for us are ISR and SSR. Some quick googling suggests these may not be supported on CF Pages, but it’s hard to know for sure without testing.

Do you do these steps manually or do you have them automated with CI/CD?

Manually atm. We only deploy a stable version every couple of weeks.

https://i.postimg.cc/v8SnqyZm/image.png

Cloudflare is also my best friend; my total monthly server cost is even below $40 with this traffic.

What’s your website?

Thank you for the article. I wonder why you are not using the Cloudflare Image Optimization tools that they provide in the paid plans?

Because it costs an arm and a leg 🙂

I did use CF image resizing for [these small thumbnails](https://file.coffee/u/3w5T9vxgEZO-DR.png) a few months ago as it was more convenient than uploading them to bunny.net at the time, but those few images alone ran us up a $300 bill in less than a month before I noticed what was happening. So bunny.net is significantly more affordable for image resizing.

It makes sense eheh 🙂 so why not use Next Image? Is it to use fewer resources on Vercel? I use Nuxt so I’m not sure how Next Image operates, but I believe it should work more or less the same way.

One last question: since you are caching the API, how does Cloudflare know to clear that endpoint when you submit new content?

Really thank you for this amazing post.

First we need to store images on a CDN that’s separate from the main site repo, since this gets added to all the time and the site code doesn’t change often. You can probably use Next Image for this, but it’s simpler to stick with one service to host and optimize the images.

When adding new assets, our backend tool will automatically clear all relevant caches via their API.
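A targeted purge like that might look roughly like the sketch below. It is not the actual backend tool: the endpoint paths in `urls_for_new_asset` are hypothetical, and the limit of 30 URLs per purge request is an assumption to verify against Cloudflare's documentation for your plan.

```python
API = "https://api.cloudflare.com/client/v4"
MAX_URLS_PER_PURGE = 30  # assumed per-request limit; check your plan


def urls_for_new_asset(asset_id):
    """Cached URLs that go stale when an asset is added (hypothetical paths)."""
    base = "https://api.example.com"
    return [
        f"{base}/assets",
        f"{base}/info/{asset_id}",
        f"{base}/files/{asset_id}",
    ]


def purge_requests(zone_id, urls):
    """Split the URLs into purge-by-URL requests of at most 30 URLs each."""
    endpoint = f"{API}/zones/{zone_id}/purge_cache"
    return [
        {
            "method": "POST",
            "url": endpoint,
            "body": {"files": urls[i:i + MAX_URLS_PER_PURGE]},
        }
        for i in range(0, len(urls), MAX_URLS_PER_PURGE)
    ]


requests_to_send = purge_requests("your-zone-id", urls_for_new_asset("sky_01"))
print(len(requests_to_send), requests_to_send[0]["body"]["files"])
```

Each request would then be sent with an API token, and everything else in the cache stays warm.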

Could you share a bit more about bunny.net? They say they do device-optimized image serving using device detection. How would caching work here? Or are you just using basic image resizing and caching?

Bunny does their own caching, Cloudflare is not involved (other than DNS if you choose to use your own domain).

The optimization is basically this: Whatever file you’ve uploaded (PNG/JPG…) will be served as a WEBP image if the browser supports it. If not, then the original file format is served instead.
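As an illustration of that fallback logic, here is a minimal sketch of format negotiation based on the request's `Accept` header, which is the usual way browsers advertise WebP support. Whether Bunny detects support this way internally is an assumption, and `choose_format` is a hypothetical name.

```python
def choose_format(accept_header: str, original_format: str) -> str:
    """Serve WebP when the browser advertises support, else the original."""
    accepted = [
        part.split(";")[0].strip().lower()
        for part in accept_header.split(",")
    ]
    return "webp" if "image/webp" in accepted else original_format


# A browser that lists image/webp gets WebP; one that doesn't gets the PNG.
print(choose_format("image/avif,image/webp,image/*,*/*;q=0.8", "png"))  # webp
print(choose_format("image/png,image/*;q=0.8", "png"))                  # png
```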

Great post! One question: How much data are you storing on Backblaze? Do the cached assets live only in the cache, or is the entire asset library stored in cloud object storage and the cache repopulated from there?

Everything is stored on Backblaze; that’s our ground truth. It’s about 2TB. Cloudflare’s cache is dynamic: they cache each request as it comes.

Hi, you mentioned the fear/potential of the Bandwidth Alliance ending… did you take a look at Dropbox Advanced, as they offer basically unlimited storage for roughly $45 a month with 4 TB bandwidth per day? (https://help.dropbox.com/files-folders/share/banned-links?fallback=true)

For pure storage I think that is an unbeatable price tag, compared to pure storage cost for backblaze. But I think you can’t up the bandwidth per day limit.

May I translate this blog and re-post it somewhere else?

Sure 🙂 as long as you link back to this post.

Could you please shed some light on how scalable this approach is?

Scalability was my main concern from the start, coming from numerous stability issues on the old haven sites.

Almost everything is hosted on some cloud service that is inherently scalable by design (e.g. Vercel’s serverless functions). As they charge per usage, they actually want you to scale indefinitely.

The API is the exception here, but only because of my lack of experience with APIs in general. For now it’s not a priority to look into that, as the $5 server seems to do just fine. I’d love some suggestions as to how I could host the API in some kind of similar serverless manner. It’s a simple Express app, without any kind of authentication to worry about.

Thank you, Greg! It’s genius design 👍

Amazing article! Could you elaborate a little bit more about the API server on Vultr? E.g. tech stack, operations and the purpose of the API.

It’s a basic Express server, the source is all here if you want to dig deeper: https://github.com/Poly-Haven/Public-API

Thanks for this! Amazing that this all runs so cheaply!

Thanks for documenting your stack for others to learn from.

How would your site/service perform if any one of your 5 or 6 providers has an outage? In other words, do you need all of them to be up simultaneously, or will your service and site degrade gracefully if any of the providers is having an issue?

Bunny has had outages before; when this happens, there are no images on the site.

For the rest of them, there’s at least some short-term protection with the Cloudflare cache layer, but if CF itself goes down then we’re dead in the water (along with half the internet). So far in the last year only Bunny has had issues afaik.

Hi, It’s a nice read.

I’m curious about the cache-control headers you used in your Next.js config and on cloudflare.

How did you handle the remaining 15% that Cloudflare doesn’t cache using Backblaze?

I thought Cloudflare had limitations for their $20 plan on how much egress you could do a month… It seems in practice they don’t really enforce that below a certain amount???

Nothing to add, just wanted to say thanks for writing this up.

Very interesting. Why don’t you use Cloudflare Pages instead of Vercel? It allows you to run a Next.js app similarly to Vercel, and for free.

And which premium features from Cloudflare do you use most? As far as I can see, caching static assets doesn’t require them; the free features are enough.

Thx for the great article!

When you set up the Cloudflare -> Backblaze integration, did you set a “Class B transactions cap” limit? We were trying to integrate this and got:

    {
      "code": "download_cap_exceeded",
      "message": "Cannot download file, download bandwidth or transaction (Class B) cap exceeded. See the Caps & Alerts page to increase your cap.",
      "status": 403
    }

We didn’t have to set any limits/caps. I have no idea what could cause that, sorry!

We don’t set any limits. We just received a notification: “Class B Transactions Cap Reached 100%”. We started digging in this direction, and the article https://www.backblaze.com/blog/free-image-hosting-with-cloudflare-transform-rules-and-backblaze-b2/ says:

“Transactions: Each download operation counts as one class B transaction. … By serving your images via Cloudflare’s global CDN and optimizing your cache configuration as described above, you will incur no download costs from B2 Cloud Storage, and likely stay well within the 2,500 free download operations per day.”

It sounds like you were billed by Backblaze for each Class B transaction. This is how it looks in our console: https://photos.app.goo.gl/xkaLBpu7CVJ8bkxx9

Do you have the same?

IDK if you want to avoid being too locked into Cloudflare, but they came out with a serverless SQL database called D1 that can be used independently of their Workers service and appears to be ridiculously cheap. It appears to include the first 25 BILLION reads a month, and then it’s something like 600 times cheaper than Firebase after that. Definitely worth checking out https://developers.cloudflare.com/d1/platform/pricing

They also have a serverless code runtime called Workers that is pretty interesting, but at your scale would probs cost more than your VM by quite a bit.

Thanks for letting me know! I’ll check it out 🙂